As the summer approached, I became more and more conflicted about how to assess my students. Many options existed, including timed, self-grading quizzes in Google Forms, video assessments via FlipGrid, etc. To simplify things, I elected to use a Google Doc template for all assessments: students completed their responses and calculations and then shared the doc with me for assessment.

Inherent to the above process was TRUSTING that students would do the work individually, without notes or text, given that the technology I was using had no way to ensure individual work. Moreover, the thought of doing live assessments over Zoom, where I could watch students working via webcam, did not sit well with me. That being said, I still had a hunch that some student answers, primarily to questions that were algorithmic in nature (stoichiometry, etc.), were not authentic. During the last few weeks of distance learning, I created a new format/template for all calculation-based questions. In addition to requiring a correct numerical answer, it also required reflection in a way that could not be "Googled," forcing students to reflect on the process they employed to arrive at their answer AND to give a conceptual explanation of the phenomenon at hand. Essentially, I was trying to create a meaningful assessment format for the distance learning setting, one driven by the question structure, NOT the technology. Below is an example of an initial question, followed by a modified version that incorporates a second meta-reflection piece to assess for potential (for lack of a better word) "cheating" in the distance learning setting:

Initial Question Example

Modified Question Example

The template used in the modified question above can be layered on top of any calculation in my chemistry class. With respect to grading, I chose to award 1 pt for the correct answer to #1 in the modified version and 4 points for the meta-reflection piece (one point for each answer within the template). The modified process accomplished two things. First, it added an assessment component where, even if the calculation was obtained from a tool such as Wolfram Alpha (see example solution to above problem here), students were forced to reflect on their process, something that could not be generated online. Second, by allocating more points to the reflection, I sent the message that I valued that process more than the calculation, setting a precedent that I hope to carry into face-to-face instruction...whenever that resumes. Full disclosure: The modified version is MUCH harder to grade. So yeah, there's that :). The cognitive dissonance behind using assessment as a learning tool. Never easy.

Today I have been reflecting a lot on assessment. Specifically, whether or not there is a place for a traditional "paper" assessment in my chemistry course. One of my colleagues is doing creative work around having students print copies of assessments, solve the problems, take images of their work, and submit them back as PDFs to be annotated and graded. The students take the assessment over Zoom in "gallery" view.

I have been using Google Forms (as I have rambled on and on about) for formative assessment, and for weekly summative assessments I have been doing something similar to my colleague. Rather than printing assessments and doing them live over Zoom, I have been pushing out Google Doc templates with spaces in "blue" for students to submit images of their work: "Do What's in Blue." Click here for an example template and here for a student response using the template. Although both systems seem to be working well, mine with significantly less oversight, I wonder if there isn't a much better way. Am I completely missing something here? Am I trying to fit F2F assessment into an online learning environment? Should I be doing live video interviews for assessments? I am having great success in my Biochemistry course doing medical case studies, as the task lends itself much more to engaged critical thinking (click here for the case template and here for a student response to the template). Is there a way I could do case study analysis in chemistry? Apologies for the rambling post today that reads more like a journal entry.

During the first week of school, I wrote about my excitement as I embarked on a paradigm shift in how my students and I think about and record work (grades, activities, reading reflections, etc.).

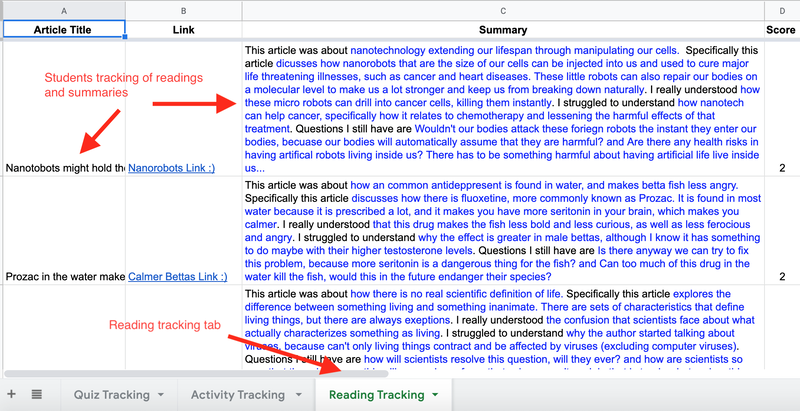

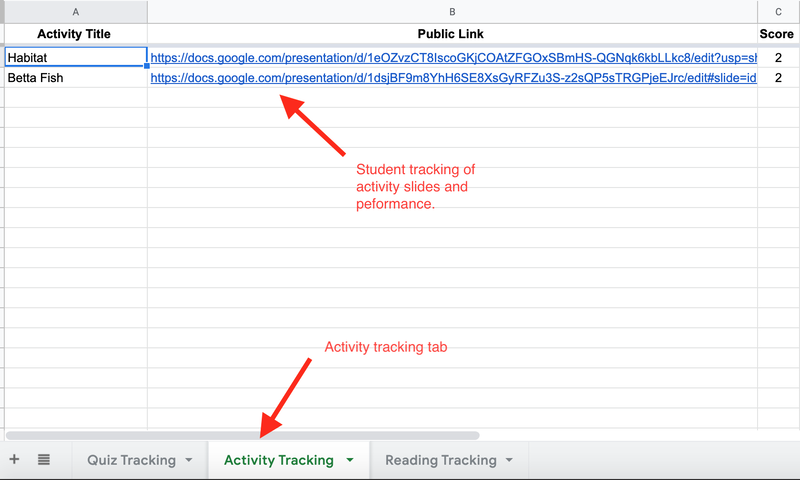

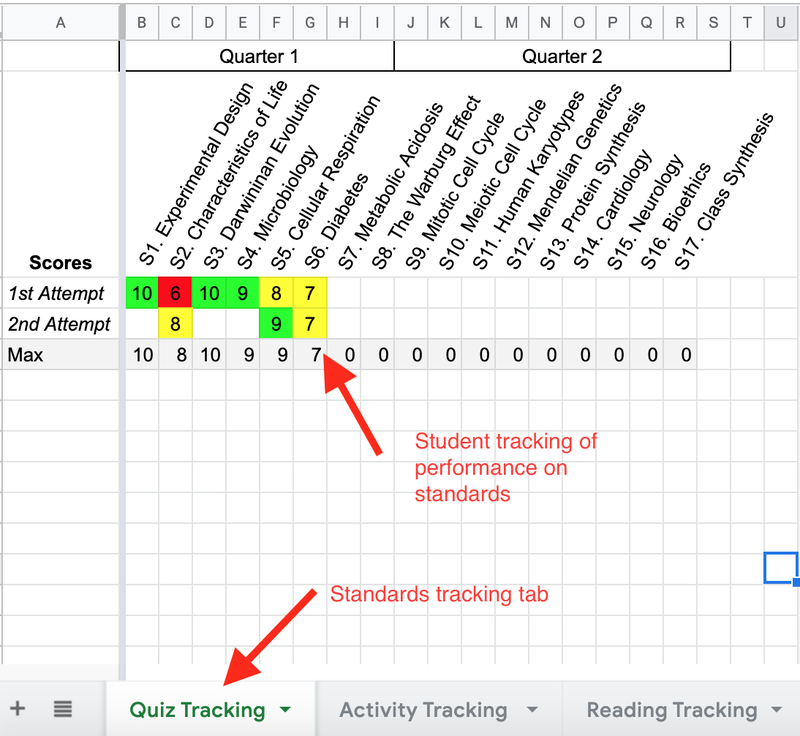

Three weeks later, my excitement has turned into obsession! I am SO happy with this new process that, for lack of a better, less "buzzy" phrase, it feels as if I am leveraging a Google Sheet template as a "Metacognitive Portfolio." It is a process that, after years of tinkering, really, actually forces "thinking about thinking" and curates work effectively. Not something I read on a blog (like this one) or something I was told to do at a workshop or faculty PD session.

What I mean is this: rather than my recording grades in our grade book, students keep track of their own progress on standards, while I keep track of their progress on a paper sheet and update the grade book quarterly. BUT in addition to standards tracking, students are tracking ALL of their work in a Google Sheet using separate tabs. One tab is for tracking standards performance (auto-generated colors help students see their reassessment needs). One tab is for links to their activity slides (click here for a previous post about this process). Finally, one tab is for students to track, in a structured way, their reflections on class readings. Thus, the three things I grade (standards, activities, and readings) are now curated easily in one place, where students can not only reflect on their performance but also catalog their work. One place for students to gain awareness of their performance, build pride in their body of work, and develop appreciation for the readings curated over the course of the year. Simple. No website. Easy. Effective. So far... Plus, I don't have students coming and asking, "What is my grade?" Instead, I have students asking, "Have you graded that yet so I can update my sheet?" It keeps us both honest in the best possible way. MOST IMPORTANTLY, whenever I need to access student work, I go to ONE PLACE to access it ALL! Grades, activities, readings, etc. I love it! Less tech. Better tech. Click here for the template I pushed out on the first day of class for them to use for tracking. They shared it with me, I made a folder of their sheets, and that's it. I have ALL their work. No digging through folders of files, just a sheet. (Can you tell I'm excited??) See images of the system below, along with an embedded video of me reflecting on the process, in an overly excited way, after a day of teaching. (Note: this might not be life-changing to any of you, but I have to share it. Simpler = Better IMO. I have tried so many things, and this...this, my friends...is something I'm truly pumped about!) It's like REFLECTIVE PRACTICE CHRISTMAS or something. :)

I have written in the past about using Google Slides as a lab reporting tool in my courses. Since writing about this process last year, I have COMPLETELY embraced this method.

As I wrote before, images, video, diagrams, etc. can all be captured easily; live conclusion presentations are seamless; hyperlinks to other resources can be added to enhance conclusions; and students can easily alter the template fonts and design to match their own vision for the report (so much more could be said). This school year I have decided to streamline the process, adding instructions, embedded video, and rubrics to the slide template students work in. Click here and here for a few template examples and here and here for associated student products. A short post, but given the efficacy of this subtle instructional strategy, I felt it was worth sharing again!

Ever since I read amazing physics instructor Frank Noschese's writings on Standards-Based Grading (SBG), I have been obsessed with figuring out a system that works for me.

This 2011 post outlines my initial attempt. This 2018 post outlines one of many subsequent revisions. Today, day 1 of the 2019-2020 school year and my 19th year in the classroom, I find myself reinventing the SBG wheel once again. I am committed to the process, or some eventual variation of it, for three primary reasons:

Each iteration is catalyzed by some aspect of the three rules above falling short. Either I have overcomplicated the grading process, as my first attempt in 2011 demonstrates (4.7/5), by trying to place a 5 pt scale on a 10 pt scale, or, as my 2018 post demonstrates, I have overcomplicated the student communication piece by forcing students to record their performance on a ridiculously complex spreadsheet. Good intentions...bad result. I think I'm on to something this year! At least that little pedagogical voice in my gut senses I'm on to something. Here's the plan:

I am hopeful that the combination of simplified, more overarching standards; a simpler, more structured way for students to track their performance with color codes; and limited public recording of grades, with students individually recording as much of their standards performance as possible, will be a system that works for me this year! The joys of reflective practice.
